Thinking about running your big data workloads on Google Dataproc? We can see why. Google Dataproc is a powerful tool for analytics and data processing, but to get the most out of it you have to use it properly. We’re going to explore five best practices you can use to lower your Google Cloud costs while maximizing efficiency:

- Using Google Cloud Storage

- Using the jobs API to submit jobs quicker

- Specifying your Dataproc cluster image version

- Being prepared for errors to occur

- Keeping up to date on the platform

Following these tips will ensure the best performance and help keep your cloud costs in line. But before diving into the best practices, let’s go over a few of the benefits that come with using Google Dataproc.

Why Use Google Dataproc?

There are a lot of cloud vendors to choose from. When it comes to Dataproc, there are three key features that make it stand out:

1. Ease of use. Creating Hadoop or Spark clusters on-premises or through IaaS providers can take 5-30 minutes. Dataproc clusters take about 90 seconds or less, on average, to start up, scale, or shut down.

2. Pay-as-you-go model. Instead of paying for idle capacity, you pay only for the cluster resources you use while they are running, billed by the second.

3. Hardware options. Google Dataproc supports different instance types, including preemptible virtual machines, so you can choose the server sizes that fit your needs.

Those are just three of the many reasons you might choose Google Dataproc as your platform. But once you’ve made your decision, how do you improve the performance of the big data applications running on your new platform to lower costs? That’s where tried-and-true best practices come in.

Five Best Practices to Lower Your Google Cloud Costs on Dataproc

1. Use Google Cloud Storage

Google Cloud Storage is designed for scalability and performance. Unlike with HDFS, you can store any amount of data and retrieve any amount of data as needed, without adding compute. Because Google Cloud Storage has fewer limitations than HDFS, it’s quickly overtaking HDFS deployments.

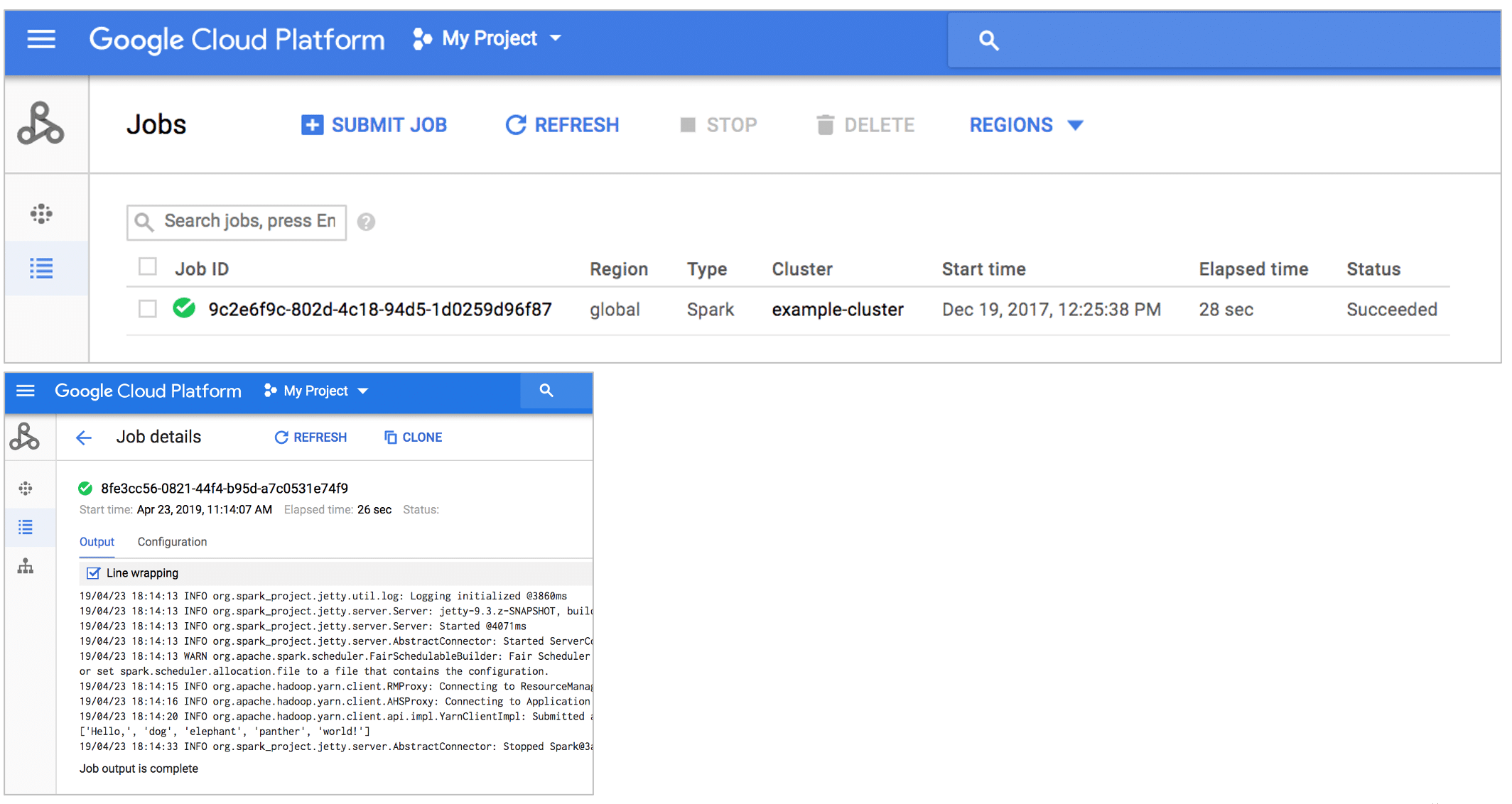
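Dataproc clusters include the Cloud Storage connector, so jobs can generally read and write `gs://` paths where they previously used `hdfs://` paths. As a minimal sketch of that migration step, the helper below maps an HDFS URI onto the same path in a bucket. The function name `to_gcs_uri` and the bucket name `example-bucket` are assumptions made for illustration, and the data itself still has to be copied into the bucket separately.

```python
from urllib.parse import urlparse


def to_gcs_uri(hdfs_uri: str, bucket: str) -> str:
    """Map an hdfs:// URI onto the same path inside a Cloud Storage bucket."""
    parsed = urlparse(hdfs_uri)
    if parsed.scheme != "hdfs":
        raise ValueError(f"expected an hdfs:// URI, got: {hdfs_uri}")
    # Drop the NameNode host and port; keep only the file path.
    return f"gs://{bucket}{parsed.path}"


# "example-bucket" is a hypothetical bucket name.
print(to_gcs_uri("hdfs://namenode:8020/warehouse/events/2024/", "example-bucket"))
```

Keeping the path layout identical on both sides means existing job arguments only need their URI prefix changed.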

2. Use the Jobs API to Submit Jobs Quicker

One of the learning curves that comes with moving to the cloud is setting up the required security frameworks. Using the jobs API smooths the onboarding process and simplifies security for job submission by letting you tailor a security policy to a job. This eliminates the need to set up gateway nodes and lets you submit jobs more quickly.

Submit jobs to a cluster using a jobs.submit call over HTTP, with the gcloud command-line tool, or via the Google Cloud Platform Console.

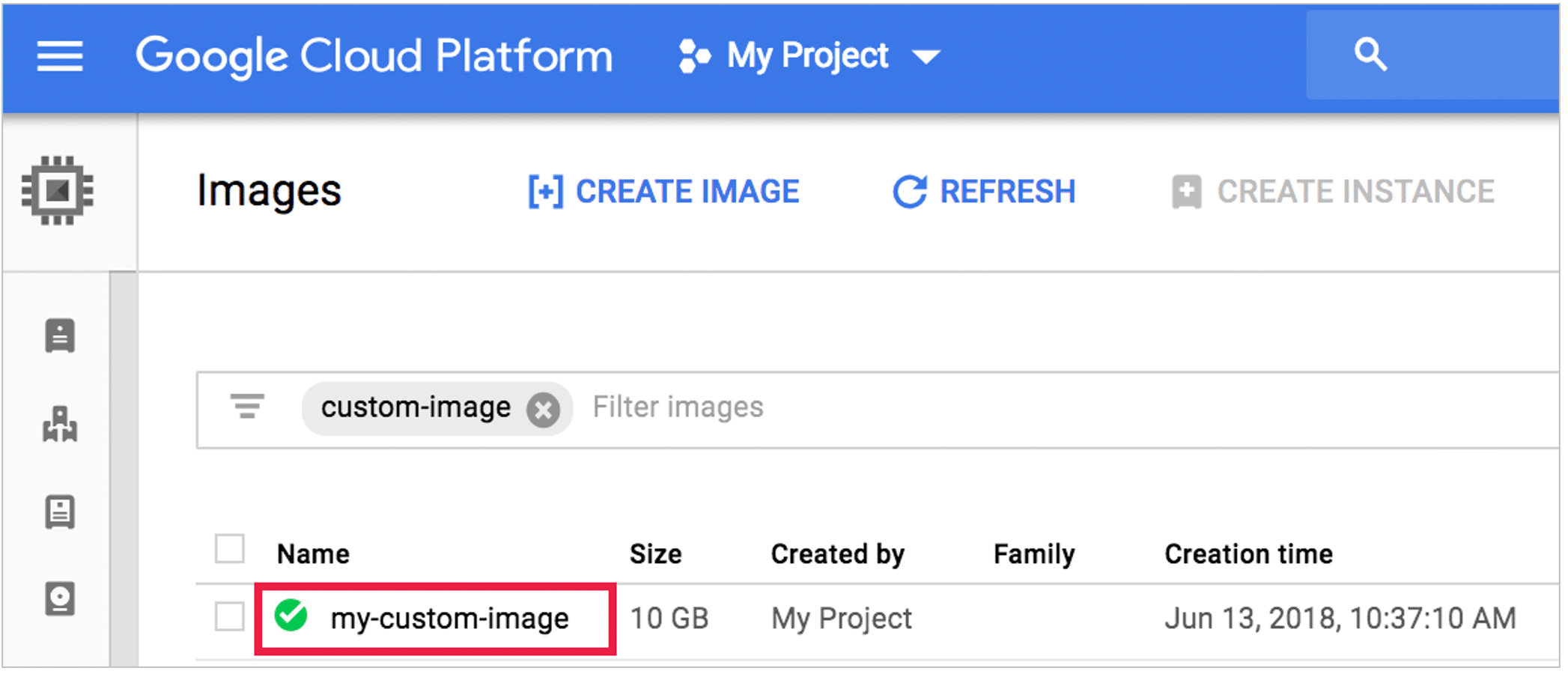
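To make the jobs.submit call over HTTP more concrete, here is a minimal sketch that builds the request URL and JSON body for a PySpark job without sending it. The project, region, cluster, and file names are placeholders, and the helper name `build_submit_request` is our own. A real request would also need an OAuth 2.0 access token in the `Authorization` header, which is left out here.

```python
import json


def build_submit_request(project_id, region, cluster_name, main_python_file_uri):
    """Return the URL and JSON body for a Dataproc v1 jobs.submit request."""
    url = (
        "https://dataproc.googleapis.com/v1/"
        f"projects/{project_id}/regions/{region}/jobs:submit"
    )
    body = {
        "job": {
            # Which cluster should run the job.
            "placement": {"clusterName": cluster_name},
            # The driver script, stored in Cloud Storage.
            "pysparkJob": {"mainPythonFileUri": main_python_file_uri},
        }
    }
    return url, body


# All values below are hypothetical placeholders.
url, body = build_submit_request(
    "example-project", "us-central1", "example-cluster",
    "gs://example-bucket/jobs/wordcount.py",
)
print("POST", url)
print(json.dumps(body, indent=2))
```

Because the request only names a cluster and a job file, no gateway node or SSH session into the cluster is involved.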

3. Specify Your Dataproc Cluster Image Version

Dataproc uses images to combine Google Cloud Platform connectors, Apache Spark, and Apache Hadoop components in a single package that’s deployable in a Dataproc cluster. It’s best practice to specify an image version because it pins the software stack at cluster creation. If you don’t specify one, Dataproc uses its current default image version, which changes over time, so clusters created on different dates can end up running different component versions.
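As a minimal sketch of specifying an image version, the helper below assembles a `gcloud dataproc clusters create` command with the `--image-version` flag set explicitly. The cluster name, region, and the version string `2.1-debian11` are example values only, so check the current image version list before choosing one. The helper name `cluster_create_command` is an assumption for illustration.

```python
import shlex


def cluster_create_command(cluster_name, region, image_version):
    """Build a gcloud command that creates a cluster on a pinned image version."""
    return [
        "gcloud", "dataproc", "clusters", "create", cluster_name,
        f"--region={region}",
        # Pin the software stack instead of relying on the default image.
        f"--image-version={image_version}",
    ]


# Example values; pick an image version that is currently supported.
cmd = cluster_create_command("example-cluster", "us-central1", "2.1-debian11")
print(shlex.join(cmd))
# To run it for real: subprocess.run(cmd, check=True)
```

Keeping the version in one place, such as a config file or a constant, makes upgrades a deliberate, reviewable change.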

4. Be Prepared for Errors to Occur

While we’d like to think they’ll never happen, errors will occur. You can cut troubleshooting time and improve performance by being prepared. If an error does occur on Google Dataproc, check for information in your cluster’s staging bucket first. It can also pay off to simply restart a job that failed. Unlike legacy on-prem environments, where it was better to spend time troubleshooting, on Dataproc it’s sometimes quicker to restart a failed job. One quick tip: Dataproc doesn’t restart failed jobs by default. To change this, specify the maximum number of retries per hour (max is 10).
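The retry setting can be sketched as follows: the helper builds a `gcloud dataproc jobs submit pyspark` command with `--max-failures-per-hour` and rejects values outside the 1 to 10 range mentioned above. The cluster, region, and file names are placeholders, and `job_submit_command` is a hypothetical helper name.

```python
def job_submit_command(cluster_name, region, main_python_file_uri,
                       max_failures_per_hour):
    """Build a gcloud command that submits a restartable PySpark job."""
    if not 1 <= max_failures_per_hour <= 10:
        raise ValueError("max_failures_per_hour must be between 1 and 10")
    return [
        "gcloud", "dataproc", "jobs", "submit", "pyspark",
        main_python_file_uri,
        f"--cluster={cluster_name}",
        f"--region={region}",
        # Let Dataproc restart the driver up to this many times per hour.
        f"--max-failures-per-hour={max_failures_per_hour}",
    ]


# Placeholder names; replace with your own cluster and job file.
cmd = job_submit_command(
    "example-cluster", "us-central1",
    "gs://example-bucket/jobs/wordcount.py", 5,
)
print(" ".join(cmd))
```

Automatic restarts suit jobs that are safe to rerun. A job that appends output on every attempt may need to be made idempotent first.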

5. Keep Updated and Review the Latest Release Notes

Like any cloud environment, Google Dataproc is not static. Dataproc publishes weekly release notes that cover all recent changes made to the platform, including bug fixes and new capabilities. A release might include a new feature that greatly improves your cluster performance, so it’s worth keeping up to date.

How Pepperdata Helps

Following the best practices listed here is the first step towards getting the most out of your big data workloads running on Google Dataproc. But if you want to get the best performance possible and really cut your Google Cloud costs, Pepperdata can help.

Through observability and autonomous optimization, the Pepperdata solution provides the tools you need to cut troubleshooting time by 90%, automatically increase throughput by up to 50%, and recapture resource wastage so you can save on infrastructure costs.

Ready to try it for yourself? Take a self-guided tour of the Pepperdata solution now.